These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.


Resaro: Evaluating the performance & robustness of an AI system for Chest X-ray (CXR) assessments

A healthcare organisation in Singapore engaged Resaro to evaluate the feasibility of deploying a commercial AI solution for evaluating Chest X-ray (CXR) images.

Resaro’s Bias Audit: Evaluating Fairness of LLM-Generated Testimonials

A government agency based in Singapore engaged Resaro’s assurance services to ensure that an LLM-based testimonial generation tool is unbiased with respect to gender and race and in line with the agency’s requirements.

Resaro’s Performance and Robustness Evaluation: Facial Recognition System on the Edge

Resaro evaluated the performance of third-party facial recognition (FR) systems that run on the edge, in the context of assessing vendors’ claims about the performance and robustness of the system in highly dynamic operational conditions.

FairNow: Conversational AI And Chatbot Bias Assessment

FairNow's chatbot bias assessment provides a way for chatbot deployers to test for bias. This bias evaluation methodology relies on the generation of prompts (messages sent to the chatbot) that are realistic and representative of how individuals interact with the chatbot.

FairNow: Regulatory Compliance Implementation and the NIST AI RMF / ISO Readiness

FairNow's platform simplifies the process of managing compliance for the NIST AI Risk Management Framework, ISO 42001, ISO 23894, and other AI laws and regulations worldwide. Organisations can use the FairNow platform to identify which standards, laws, and regulations apply based on their AI adoption and manage the set of activities necessary to ensure compliance.

FairNow: NYC Bias Audit With Synthetic Data (NYC Local Law 144)

New York City's Local Law 144 has been in effect since July 2023 and was the first law in the US to require bias audits of the AI tools that employers and employment agencies use in hiring or promotion. FairNow's synthetic bias evaluation technique creates synthetic job resumes that reflect a wide range of jobs, specialisations and job levels so that organisations can conduct a bias assessment with data that reflects their candidate pool.
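
Bias audits of this kind typically report a selection rate per demographic category and an impact ratio, i.e. each category's selection rate divided by the rate of the most-selected category. The following is a minimal sketch of that calculation, assuming binary selected/not-selected outcomes. The function name `impact_ratios`, the category labels and the sample data are hypothetical illustrations, not FairNow's implementation or real audit results.

```python
from collections import Counter


def impact_ratios(records):
    """Compute impact ratios from (category, was_selected) pairs.

    Impact ratio = a category's selection rate divided by the
    selection rate of the most-selected category.
    """
    totals = Counter()
    selected = Counter()
    for category, was_selected in records:
        totals[category] += 1
        if was_selected:
            selected[category] += 1

    # Selection rate per category: share of candidates who were selected.
    rates = {c: selected[c] / totals[c] for c in totals}

    best = max(rates.values())
    if best == 0:
        raise ValueError("no category has any selected candidates")
    return {c: rate / best for c, rate in rates.items()}


# Hypothetical outcomes for synthetic resumes (illustrative numbers only).
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
ratios = impact_ratios(sample)
for category, ratio in sorted(ratios.items()):
    print(f"{category}: impact ratio {ratio:.2f}")
```

In this made-up sample, `group_a` has the highest selection rate and so an impact ratio of 1, while `group_b` comes out at roughly 0.75. How such a ratio should be interpreted depends on the audit's own requirements.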

Credo AI Transparency Reports: Facial Recognition application

A facial recognition service provider required trustworthy Responsible AI fairness reporting to meet critical customer demand for transparency. The service provider used Credo AI’s platform to provide transparency on fairness and performance evaluations of its identity verification service to its customers.

Credo AI Policy Packs: Human Resources Startup compliance with NYC LL-144

Credo AI's Policy Pack for NYC LL-144 encodes the law’s principles into actionable requirements and adds a layer of reliability and credibility to compliance efforts.

Credo AI Governance Platform: Reinsurance provider Algorithmic Bias Assessment and Reporting

A global provider of reinsurance used Credo AI’s platform to produce standardised algorithmic bias reports to meet new regulatory requirements and customer requests.

Advai: Regulatory Aligned AI Deployment Framework

The adoption of AI systems in high-risk domains demands adherence to stringent regulatory frameworks to ensure safety, transparency, and accountability. This use case focuses on deploying AI in a manner that not only meets performance metrics but also aligns with regulatory risks, robustness, and societal impact considerations.

Advai: Implementing a Risk-Driven AI Regulatory Compliance Framework

As AI becomes central to organisational operations, it is crucial to align AI systems and models with emerging regulatory requirements globally. This use case focuses on integrating a risk-driven approach, based on and aligning with ISO 31000 principles, to assess and mitigate risks associated with AI implementation.

Advai: Assurance of Computer Vision AI in the Security Industry

Advai’s toolkit can be applied to assess the performance, security and robustness of an AI model used for object detection. Systems require validation to ensure they can reliably detect various objects within challenging visual environments.

Advai: Robustness Assurance Framework for Guardrail Implementation in Large Language Models (LLMs)

To assure and secure LLMs up to the standard needed for business adoption, Advai provides a robustness assurance framework designed to test, detect, and mitigate potential vulnerabilities.

Advai: Streamlining AI Governance with Advai Insight for Enhanced Robustness, Risk Management and Compliance

Advai Insight is a platform for enterprises that transforms complex AI risks and robustness metrics into digestible, actionable insights for non-technical stakeholders.

Advai: Operational Boundaries Calibration for AI Systems via Adversarial Robustness Techniques

To enable AI systems to be deployed safely and effectively in enterprise environments, there must be a solid understanding of their fault tolerances in response to adversarial stress-testing methods.

Advai: Advanced Evaluation of AI-Powered Identity Verification Systems

The project introduces an innovative method to evaluate identity verification vendors' AI systems, crucial to online identity verification, which goes beyond traditional sample image dataset testing.

Advai: Robustness Assessment of a Facial Verification System Against Adversarial Attacks

Advai evaluated the resilience of a facial verification system used for authentication, focusing on preventing image manipulation and ensuring robustness against adversarial attacks.

Shakers' AI Matchmaking System

Shakers' AI matchmaking tool connects freelancers and projects by analyzing experiences, skills, and behaviours, ensuring precise personal and professional talent-client matches within its vast community.

Higher-dimensional bias in a BERT-based disinformation classifier

Application of the bias detection tool to a self-trained BERT-based disinformation classifier on the Twitter1516 dataset.

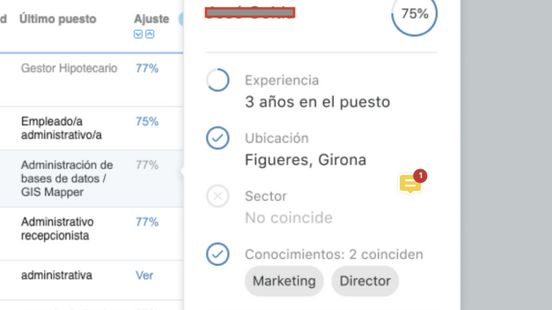

How You Match functionality in InfoJobs

In InfoJobs, the information available in candidates' résumés and in the posted job offers is used to compute a matching score between a job seeker and a given job offer. This matching score is called ‘How You Match’, and is currently used in multiple user touchpoints in InfoJobs, the leading job board in Spain.